Export Azure Logic App Run History | Performance Analysis

Azure Logic Apps are a powerful tool for automating workflows and integrating various services in the cloud. However, one of the challenges users often face is analyzing the performance and run history of their Logic Apps. The Azure portal does provide a way to view the run history of Logic Apps, but it lacks a direct method for exporting this data for further analysis, such as performance benchmarking or troubleshooting recurring issues.

In this blog post, I’ll walk you through the steps to export Azure Logic App run history using Azure Log Analytics. We’ll discuss why this is important, the prerequisites you need to set up, and how to use a Kusto Query Language (KQL) query to extract the data you need.

Why Export Logic App Run History?

The Azure portal allows you to view the run history of your Logic Apps, but it doesn’t provide a built-in feature to export this data. This limitation can be problematic when you want to analyze the performance of your workflows over time, identify patterns, or simply keep a historical record of runs for audit purposes.

Exporting the run history to Excel or another data analysis tool allows you to:

- Perform deeper analysis: With the data in a spreadsheet, you can apply formulas, create charts, and use pivot tables to analyze trends and performance metrics.

- Troubleshoot issues: By examining run history in detail, you can identify patterns or anomalies that might indicate underlying problems.

- Keep a historical record: The default run history retenion is 90 days. You can pull this data and keep it for history, compliance and auditing purposes.

Prerequisites

Before we dive into the solution, there are a few prerequisites you need to have in place:

- Azure Logic App: Ensure you have a Logic App already set up and running in your Azure environment.

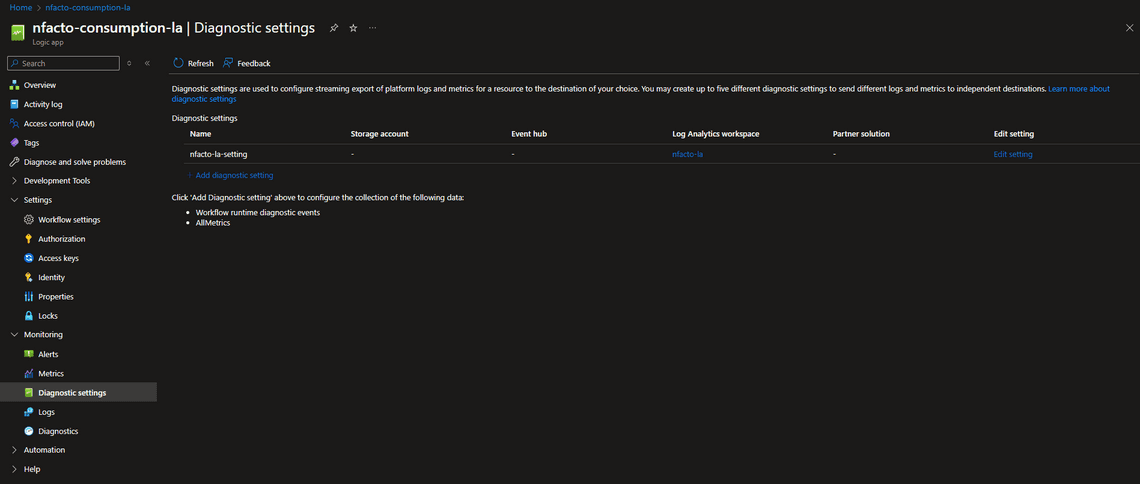

- Log Analytics Workspace: Your Logic App should be connected to a Log Analytics workspace. This connection allows you to store and query the telemetry data generated by your Logic App.

Once these prerequisites are in place, you’re ready to start querying the run history of your Logic App.

Querying Logic App Run History with Kusto Query Language (KQL)

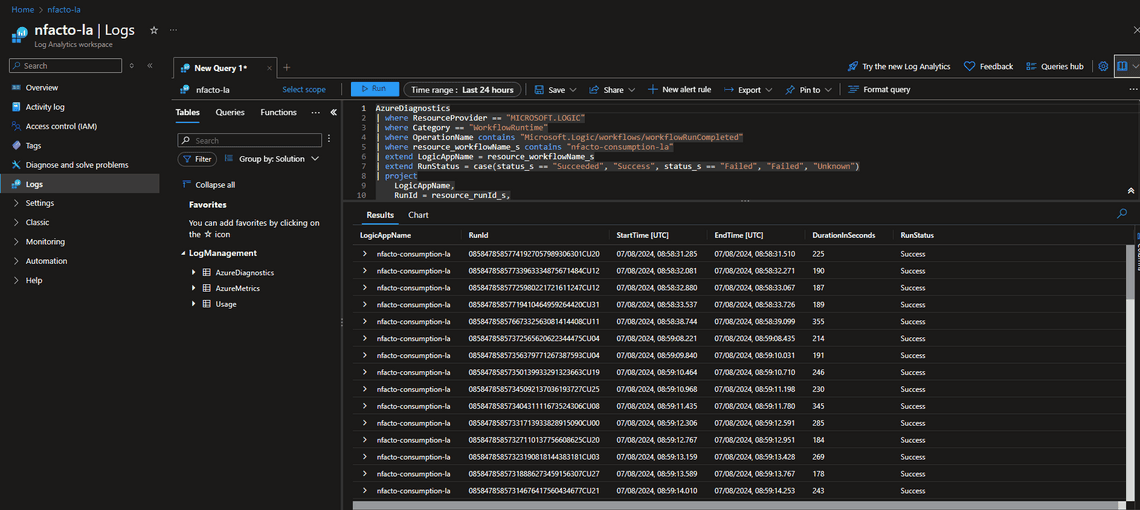

To export the run history and status of your Logic App, you can use Kusto Query Language (KQL) in Azure Log Analytics. Below is a sample query that you can use to retrieve the run history and status for a specific Logic App.

AzureDiagnostics

| where ResourceProvider == "MICROSOFT.LOGIC"

| where Category == "WorkflowRuntime"

| where OperationName contains "Microsoft.Logic/workflows/workflowRunCompleted"

| where resource_workflowName_s contains "<workflow-name>"

| extend LogicAppName = resource_workflowName_s

| extend RunStatus = case(status_s == "Succeeded", "Success", status_s == "Failed", "Failed", "Unknown")

| project

LogicAppName,

RunId = resource_runId_s,

StartTime = startTime_t,

EndTime = endTime_t,

DurationInMs = toint(DurationMs),

RunStatus

| summarize

StartTime = min(StartTime),

EndTime = max(EndTime),

DurationInMs = sum(DurationInMs),

RunStatus = max(RunStatus)

by LogicAppName, RunId

| extend DurationInSeconds = datetime_diff('second', EndTime, StartTime)

| project LogicAppName, RunId, StartTime, EndTime, DurationInSeconds, RunStatus

| order by StartTime asc

Breakdown of the Query

- Filtering by ResourceProvider:

The query begins by filtering the logs to include only those entries where the ResourceProvider is “MICROSOFT.LOGIC”, which corresponds to Azure Logic Apps.

- WorkflowRuntime Category:

The next filter is applied to ensure that only logs from the WorkflowRuntime category are included. This category contains the run history data for Logic Apps.

- OperationName Filter:

The OperationName filter is used to select logs related to the completion of workflow runs, which are crucial for understanding the run history.

- Filtering by Workflow Name:

The resource_workflowName_s filter allows you to specify the exact Logic App you want to analyze.

- Extending and Projecting Columns:

The extend command creates new columns, such as LogicAppName and RunStatus. The RunStatus column is populated based on the status_s field, categorizing it as “Success”, “Failed”, or “Unknown”. The project command is used to select and display the relevant columns: LogicAppName, RunId, StartTime, EndTime, DurationInMs, and RunStatus.

- Summarizing Data:

The summarize command aggregates data by LogicAppName and RunId, showing the minimum start time, maximum end time, total duration, and the status of each run.

- Calculating Duration:

The extend command calculates the duration of each Logic App run in seconds, making it easier to interpret the performance data.

- Ordering the Results:

Finally, the results are ordered by StartTime to provide a chronological view of the Logic App runs.

Exporting the Data Once you’ve run the query in Azure Log Analytics, you can easily export the results to Excel or another tool for further analysis:

- Run the Query: Execute the query in the Log Analytics workspace.

- Export the Results: Click on the “Export” button at the top right of the results pane. You can choose to export the data to Excel or CSV format.

- Analyze the Data: With the data exported, you can now use Excel to perform more detailed analysis, create charts, or prepare reports.

Newsletter

Related Posts

Legal Stuff